UTXO is geek-speak for “unspent transaction output.” Unspent transaction outputs are important because fully validating nodes use them to figure out whether or not transactions are valid: all inputs to a transaction must be in the UTXO database for it to be valid.

UTXO contains

- Unique identifier of the transaction

- Position of this UTXO in transaction output list

- Value or Amount

- Optional script
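
The four fields above map naturally onto a small record type. The following is a minimal sketch, not Bitcoin's actual data layout: the names `UTXO`, `UTXOSet`, `outpoint`, and `contains` are hypothetical, amounts are assumed to be integers in satoshis, and the script is kept as opaque text.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple

Outpoint = Tuple[str, int]  # (transaction id, output index)


@dataclass(frozen=True)
class UTXO:
    txid: str                     # unique identifier of the creating transaction
    index: int                    # position in that transaction's output list
    amount: int                   # value in satoshis (assumed unit for this sketch)
    script: Optional[str] = None  # optional locking script, kept as opaque text

    @property
    def outpoint(self) -> Outpoint:
        # The pair (txid, index) identifies one output unambiguously.
        return (self.txid, self.index)


class UTXOSet:
    """Toy in-memory stand-in for a node's UTXO database (hypothetical)."""

    def __init__(self) -> None:
        self._entries: Dict[Outpoint, UTXO] = {}

    def add(self, utxo: UTXO) -> None:
        self._entries[utxo.outpoint] = utxo

    def contains(self, txid: str, index: int) -> bool:
        return (txid, index) in self._entries


utxos = UTXOSet()
utxos.add(UTXO(txid="ab" * 32, index=0, amount=50_000))
found = utxos.contains("ab" * 32, 0)    # this output is recorded as unspent
missing = utxos.contains("ab" * 32, 1)  # no such output is recorded
```

Keying the set by `(txid, index)` is a design choice of this sketch: it makes the "is this input in the UTXO database?" check a single dictionary lookup.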

Transaction contains

- Reference number of current transaction

- Reference to one or more input UTXOs

- Reference to one or more newly generated output UTXOs

- Total input amount and output amount
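
As a rough illustration of how these transaction fields fit together, the sketch below checks a transaction against a UTXO set and derives the fee from the total input and output amounts. It is a simplification under stated assumptions: `Transaction` and `validate` are hypothetical names, the UTXO set is a plain dictionary of amounts, and scripts and signatures are ignored entirely.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

Outpoint = Tuple[str, int]  # (transaction id, output index)


@dataclass
class Transaction:
    txid: str               # reference number of this transaction
    inputs: List[Outpoint]  # references to the UTXOs being spent
    outputs: List[int]      # amounts of the newly generated outputs


def validate(tx: Transaction, utxo_set: Dict[Outpoint, int]) -> int:
    """Return the implied fee if tx is valid; raise ValueError otherwise."""
    if len(set(tx.inputs)) != len(tx.inputs):
        raise ValueError("the same output is referenced twice")
    total_in = 0
    for outpoint in tx.inputs:
        if outpoint not in utxo_set:
            raise ValueError(f"input {outpoint} is not in the UTXO set")
        total_in += utxo_set[outpoint]
    total_out = sum(tx.outputs)
    if total_out > total_in:
        raise ValueError("outputs exceed inputs")
    return total_in - total_out  # whatever is left over is the fee


utxo_set = {("a1", 0): 70_000, ("b2", 1): 30_000}
tx = Transaction(txid="c3", inputs=[("a1", 0), ("b2", 1)], outputs=[60_000, 39_000])
fee = validate(tx, utxo_set)  # 100_000 in, 99_000 out
```

In this example the inputs total 100,000 and the outputs 99,000, so the implied fee is 1,000.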

Hashes associated with a block

- Hash of the current block

- Hash of the previous block

- Hash of the next block (known only after that block is mined)

- Merkle root hash of the block
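
To make the Merkle root and the link to the previous block more concrete, here is a minimal sketch using Python's standard `hashlib`. It follows Bitcoin's general approach (double SHA-256, duplicating the last hash when a level has an odd count), but `toy_block_hash` is a hypothetical simplification: a real block header also carries a version, timestamp, difficulty target, and nonce.

```python
import hashlib
from typing import List


def double_sha256(data: bytes) -> bytes:
    """SHA-256 applied twice."""
    return hashlib.sha256(hashlib.sha256(data).digest()).digest()


def merkle_root(tx_hashes: List[bytes]) -> bytes:
    """Fold a list of transaction hashes into a single root hash."""
    if not tx_hashes:
        raise ValueError("a block needs at least one transaction")
    level = list(tx_hashes)  # copy so the caller's list is not modified
    while len(level) > 1:
        if len(level) % 2 == 1:
            level.append(level[-1])  # odd count: pair the last hash with itself
        level = [double_sha256(level[i] + level[i + 1])
                 for i in range(0, len(level), 2)]
    return level[0]


def toy_block_hash(prev_hash: bytes, root: bytes) -> bytes:
    # Simplified: hashes only the previous block hash and the Merkle root.
    return double_sha256(prev_hash + root)


txs = [double_sha256(name) for name in (b"tx-a", b"tx-b", b"tx-c")]
root = merkle_root(txs)
block_hash = toy_block_hash(b"\x00" * 32, root)
```

Because the block hash depends on the Merkle root and on the previous block's hash, changing any transaction changes the root, which changes this block's hash and breaks the link from every later block.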

Double spending problem

Double spending is the risk that a digital currency can be spent twice. It is a potential problem unique to digital currencies because digital information can be reproduced relatively easily.
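
A small sketch of how a UTXO-style ledger rejects a second spend of the same coin. The function name `apply_transaction` and the dictionary layout are assumptions made for illustration; signatures and amount checks are left out to keep the focus on the double spend.

```python
from typing import Dict, List, Tuple

Outpoint = Tuple[str, int]  # (transaction id, output index)


def apply_transaction(utxo_set: Dict[Outpoint, int], txid: str,
                      inputs: List[Outpoint], outputs: List[int]) -> bool:
    """Spend the inputs and create the outputs; return False if any input is gone."""
    if len(set(inputs)) != len(inputs):
        return False  # the same output listed twice within one transaction
    if any(outpoint not in utxo_set for outpoint in inputs):
        return False  # already spent, or never existed
    for outpoint in inputs:
        del utxo_set[outpoint]  # a spent output leaves the set for good
    for index, amount in enumerate(outputs):
        utxo_set[(txid, index)] = amount
    return True


ledger = {("coin", 0): 10}
first = apply_transaction(ledger, "pay_alice", [("coin", 0)], [10])
second = apply_transaction(ledger, "pay_bob", [("coin", 0)], [10])
```

Here `first` succeeds and removes `("coin", 0)` from the ledger, so `second`, which tries to spend the same output, is rejected.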

Bitcoin solution

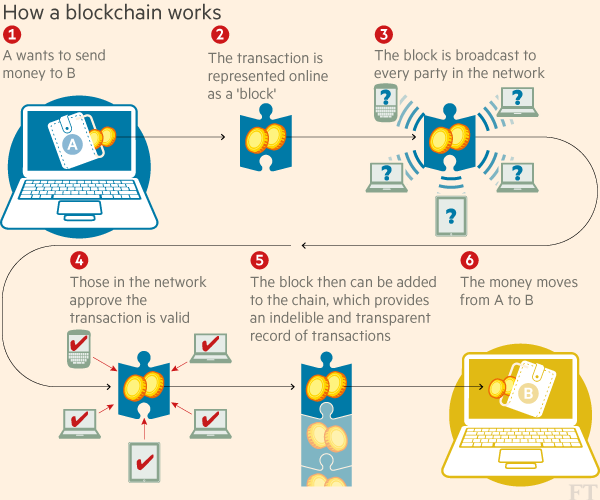

We propose a solution to the double-spending problem using a peer-to-peer network. The network timestamps transactions by hashing them into an ongoing chain of hash-based proof-of-work, forming a record that cannot be changed without redoing the proof-of-work.
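
The idea of a hash chain secured by proof-of-work can be sketched in a few lines. This is a toy model under loud assumptions: the difficulty is a fixed prefix of three hex zeros (`DIFFICULTY_PREFIX`), blocks are plain dictionaries, and the helper names are hypothetical. Real Bitcoin uses a binary header format, double SHA-256, and a numeric target.

```python
import hashlib
from typing import Dict, List

DIFFICULTY_PREFIX = "000"  # toy difficulty, chosen so the demo runs quickly
GENESIS_PREV = "0" * 64    # placeholder "previous hash" for the first block


def block_hash(prev_hash: str, data: str, nonce: int) -> str:
    return hashlib.sha256(f"{prev_hash}|{data}|{nonce}".encode()).hexdigest()


def mine(prev_hash: str, data: str) -> int:
    """Search for a nonce whose hash meets the toy difficulty."""
    nonce = 0
    while not block_hash(prev_hash, data, nonce).startswith(DIFFICULTY_PREFIX):
        nonce += 1
    return nonce


def build_chain(items: List[str]) -> List[Dict]:
    chain, prev = [], GENESIS_PREV
    for data in items:
        nonce = mine(prev, data)
        digest = block_hash(prev, data, nonce)
        chain.append({"prev": prev, "data": data, "nonce": nonce, "hash": digest})
        prev = digest
    return chain


def is_valid(chain: List[Dict]) -> bool:
    prev = GENESIS_PREV
    for block in chain:
        digest = block_hash(block["prev"], block["data"], block["nonce"])
        if (block["prev"] != prev or digest != block["hash"]
                or not digest.startswith(DIFFICULTY_PREFIX)):
            return False
        prev = digest
    return True


chain = build_chain(["tx batch 1", "tx batch 2", "tx batch 3"])
```

Editing the data in an early block changes its hash, so the stored hash no longer matches and every later block's `prev` link is broken; repairing the chain means mining that block and all of its successors again.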

The longest chain not only serves as proof of the sequence of events witnessed, but proof that it came from the largest pool of CPU power.

As long as a majority of CPU power is controlled by nodes that are not cooperating to attack the network, they'll generate the longest chain and outpace attackers.
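
One way to see why an honest majority outpaces attackers is the simplified gambler's-ruin estimate given in the Bitcoin whitepaper: if the attacker controls a fraction q of the CPU power and the honest nodes p = 1 - q, the probability that an attacker who is z blocks behind ever catches up is (q/p)^z when q < p, and 1 otherwise. The function name below is hypothetical, and the sketch deliberately leaves out the whitepaper's further Poisson refinement.

```python
def catch_up_probability(q: float, z: int) -> float:
    """Chance that an attacker with hash-power share q ever erases a z-block deficit."""
    if not 0.0 <= q <= 1.0 or z < 0:
        raise ValueError("q must be in [0, 1] and z must be non-negative")
    p = 1.0 - q  # share of CPU power held by honest nodes
    if q >= p:
        return 1.0  # without an honest majority the attacker eventually catches up
    return (q / p) ** z


minority = [catch_up_probability(0.1, z) for z in range(1, 7)]
majority = catch_up_probability(0.5, 6)
```

With q = 0.1 the values in `minority` shrink geometrically as the deficit z grows, which is why each additional confirmation makes a reversal less likely; with q = 0.5 or more the estimate is 1 regardless of z.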

The chain stitches transaction data into cryptographically linked blocks that cannot be modified without redoing the proof-of-work, and replicates them across a worldwide network of distributed computers, or "nodes."

Basic operations: what do miners do?

- Validation of Transactions

- Gathering transactions for a block

- Broadcasting valid transactions and blocks

- Consensus of next block creation / acceptance

- Chaining blocks

Transaction 0: index 0 of the confirmed block

- Created by the miner of the block

- Does not have input UTXO

- Only has output UTXO (special UTXO)

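- It is the coinbase transaction, which pays the miner's reward

- Miner's reward: 12.5 BTC per block at the time of writing (the amount halves every 210,000 blocks), plus transaction fees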
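
The block reward is not fixed: in Bitcoin it started at 50 BTC and halves every 210,000 blocks, which is where the 12.5 BTC figure comes from. The sketch below computes the subsidy for a given block height and builds a toy transaction 0. The dictionary layout and the names `make_coinbase` and `miner_address` are hypothetical, and the empty input list is a simplification that mirrors "does not have input UTXO".

```python
HALVING_INTERVAL = 210_000             # blocks between halvings
INITIAL_SUBSIDY = 50 * 100_000_000     # 50 BTC expressed in satoshis


def block_subsidy(height: int) -> int:
    """Newly created satoshis for the block at this height."""
    halvings = height // HALVING_INTERVAL
    if halvings >= 64:
        return 0  # shifted past the width of the amount; nothing left
    return INITIAL_SUBSIDY >> halvings


def make_coinbase(height: int, fees: int, miner_address: str) -> dict:
    """Toy transaction 0: no input UTXO, one output paying subsidy plus fees."""
    return {
        "inputs": [],  # simplified: spends no existing UTXO
        "outputs": [{"address": miner_address,
                     "amount": block_subsidy(height) + fees}],
    }


coinbase = make_coinbase(height=500_000, fees=25_000, miner_address="miner-address")
```

Height 500,000 falls in the third subsidy period (blocks 420,000 to 629,999), so the subsidy there is 12.5 BTC, and the single output pays that amount plus the collected fees.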

A standard transaction output can be unlocked with the private key associated with the receiving address. Addresses and their associated public/private key pairs will be covered later in the series. For now, we are concerned with the output amount only.